Explore the Role of Data Access in the Lifecycle of Game Data Services

Introduction

In the lifecycle of game data services, data access is the basic service of the iData data service system. It supplies the data that upper-level applications such as data processing, analysis, and data mining depend on. As the starting point of data services, it offers users a unified game log collection platform (Tlog), an operation data management platform (ODM), metadata management and data quality monitoring (iMeta), data transmission (DT/TDBank), operation and maintenance data extraction, and basic operation and maintenance.

The position of data basic services in the iData data service system is as follows:

The provided service content includes:

Ø Log access: Providing unified game log access and log storage services for various businesses;

Ø Data transmission: Providing full-process access services for data storage in data warehouses for various businesses, and providing a unified data source for subsequent data usage;

Ø Data monitoring: Providing comprehensive, real-time data monitoring services for the data flow in various businesses;

Ø Data extraction: Providing personalized, highly real-time data extraction services for various businesses;

Ø Basic operation and maintenance: Providing basic operation and maintenance services for the iData data service system;

Ø Process guarantee: Providing process-level guidance and supervision for the activities above, ensuring that they are carried out in an orderly and effective manner.

The following sections introduce the components and workflow of the iData basic service layer in detail and show the role data access plays in the game lifecycle.

Unified game log collection platform (Tlog)

Tlog is Tencent's unified game log collection platform, serving as the data source for game transaction logs entering the data warehouse. It standardizes the unified access of game logs and simplifies the operation of game logs.

1. Log processing system

Ø GameSvr writes logs to Tlogd via UDP;

Ø Tlogd writes the log content into text files;

Ø LogTool reads the text files, performs protocol conversion, and stores the result in LogDB;

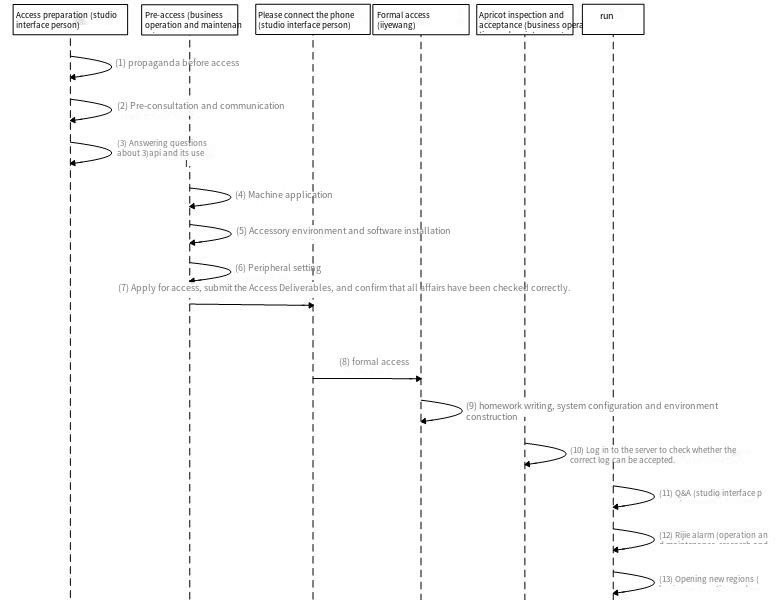
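The first two steps above can be sketched as follows. This is a minimal illustration rather than the actual Tlog implementation: the pipe-delimited line format, the function names, and the file name `tlog.txt` are all assumptions made for the example.

```python
import os
import socket
import tempfile


def format_log(event: str, fields: list[str]) -> bytes:
    """Build one log line. The pipe delimiter is an assumption for this sketch."""
    return ("|".join([event, *fields]) + "\n").encode("utf-8")


def send_log(sock: socket.socket, addr: tuple[str, int], event: str, fields: list[str]) -> int:
    """Game server side: send one log line as a single UDP datagram."""
    return sock.sendto(format_log(event, fields), addr)


def receive_and_append(sock: socket.socket, path: str) -> bytes:
    """Collector side: read one datagram and append it to a text file."""
    data, _sender = sock.recvfrom(65535)
    with open(path, "ab") as log_file:
        log_file.write(data)
    return data


def demo() -> bytes:
    """Round-trip one log line over the loopback interface."""
    collector = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    collector.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    collector.settimeout(2.0)
    game_server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    path = os.path.join(tempfile.mkdtemp(), "tlog.txt")  # hypothetical file name
    try:
        send_log(game_server, collector.getsockname(), "CreateRole", ["1", "hero"])
        receive_and_append(collector, path)
    finally:
        game_server.close()
        collector.close()
    with open(path, "rb") as log_file:
        return log_file.read()
```

UDP does not retry lost datagrams, which is one reason the row-count monitoring described later in this article matters.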

2. Tlog access sequence diagram

1) Access preparation: Mainly supports game developers, providing guidance on API access;

2) Pre-access: The operation and maintenance side prepares hardware resources and software environment according to Tlog standards;

3) Apply for access: Apply for deployment environment and access to the Tlog management platform ODM system;

4) Formal access: Accept and complete access;

5) Inspection and acceptance: Verify the access situation;

6) Operation: Ensure the normal operation of the game.

3. Log standardization access

1) Access method: Using Tlog API or UDP;

2) Access protocol: Using XML protocol to describe the log structure;

3) Self-check: Through the Checklog automated inspection tool, game developers can check the correctness of log content and XML files by themselves;

4) Access cycle: Access for a new business can be completed within 1 hour, and access for a new large region within 5 minutes;

5) Log content: Output game logs according to the "Tencent Interactive Entertainment Game Access Log Content Specification", for example, character creation logs.
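To make the idea of an XML-described log structure and a self-check concrete, here is a small sketch. The real Tlog XML format and the Checklog tool are not shown in this article, so the element names (`struct`, `entry`), the field names, and the pipe-delimited line layout are all hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Hypothetical description of a character creation log.
SCHEMA_XML = """
<struct name="CreateRole">
  <entry name="GameSvrId" type="string"/>
  <entry name="dtEventTime" type="datetime"/>
  <entry name="iZoneId" type="int"/>
  <entry name="vRoleName" type="string"/>
</struct>
"""


def load_schema(xml_text: str) -> tuple[str, list[tuple[str, str]]]:
    """Return the log name and its ordered (field name, field type) pairs."""
    root = ET.fromstring(xml_text.strip())
    fields = [(e.get("name"), e.get("type")) for e in root.findall("entry")]
    return root.get("name"), fields


def check_line(schema: tuple[str, list[tuple[str, str]]], line: str) -> list[str]:
    """Return a list of problems found in one log line (empty if it is valid)."""
    name, fields = schema
    parts = line.rstrip("\n").split("|")
    errors = []
    if parts[0] != name:
        errors.append(f"unexpected log name: {parts[0]!r}")
    values = parts[1:]
    if len(values) != len(fields):
        errors.append(f"expected {len(fields)} fields, got {len(values)}")
        return errors
    for (field_name, field_type), value in zip(fields, values):
        try:
            if field_type == "int":
                int(value)
            elif field_type == "datetime":
                datetime.strptime(value, "%Y-%m-%d %H:%M:%S")
        except ValueError:
            errors.append(f"{field_name}: {value!r} is not a valid {field_type}")
    return errors
```

For example, `check_line(load_schema(SCHEMA_XML), "CreateRole|1001|2024-01-01 12:00:00|3|hero")` returns an empty list, while a non-numeric `iZoneId` is reported as an error. A field value that itself contains the delimiter would be misread as a column shift, which is the kind of problem the correctness monitoring described later looks for.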

4. Operation Data Management Platform (ODM)

ODM serves as a unified platform for Tlog access, operation, and management, integrating a series of functions such as business management, Tlogd_server server cluster management, alarm monitoring, change management, and self-service operation tools, greatly facilitating Tlog access and operation.

Data Quality Monitoring Service (iMeta)

Metadata management mainly provides data quality indicator monitoring and data map services along the whole path of game data, from generation, through landing on disk, to distribution and centralized flow into TDW.

1. What is monitored in data monitoring?

Data flow quality indicator monitoring, including data flow volume, latency, completeness, correctness, and other indicators.

Currently, the monitoring service covers all 5-star and 6-star businesses, a coverage rate of 100%. Data is monitored in tiers according to the business, with key data receiving the most attention. Monitoring alarms are also optimized for alarm convergence, mainly along four lines: event relevance, time relevance, process relevance, and feedback from data operation events.

The current completeness and correctness threshold for Tencent game data entering the data warehouse is 0.999, that is, three nines. (Issues caused by the game logs themselves are not counted.)
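As an illustration of convergence by time relevance only, the sketch below keeps the first alarm for a given business and metric and suppresses repeats that arrive within a fixed window. The `Alarm` fields, the 10-minute window, and the grouping key are assumptions for this example. The article does not describe the actual convergence rules.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


class Alarm(NamedTuple):
    business: str   # hypothetical field: which game raised the alarm
    metric: str     # hypothetical field: e.g. "latency" or "completeness"
    at: datetime    # when the alarm fired


def converge(alarms: list[Alarm], window: timedelta = timedelta(minutes=10)) -> list[Alarm]:
    """Keep one alarm per (business, metric) per window; drop the repeats."""
    last_kept: dict[tuple[str, str], datetime] = {}
    kept = []
    for alarm in sorted(alarms, key=lambda a: a.at):
        key = (alarm.business, alarm.metric)
        previous = last_kept.get(key)
        if previous is None or alarm.at - previous >= window:
            kept.append(alarm)
            last_kept[key] = alarm.at
    return kept
```

Anchoring the window at the last alarm that was kept, rather than at the last alarm seen, means a continuous stream of repeats still produces one notification per window instead of being silenced indefinitely.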

The dimensions we monitor are as follows:

Ø Data completeness - whether there are any abnormalities in the number of data rows during transmission;

Ø Data correctness - whether the data content is abnormal: row and column shifts, Chinese encoding, etc.;

Ø Data transmission latency - currently, the time from data generation to formal entry into the data warehouse must be less than 2 hours;

Ø Data anomalies - machine and process anomalies in the various stages of data generation, transmission, and storage;

Ø Data changes - changes to data maps and data dictionaries are handled with full-process automation and exception monitoring;
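The completeness and latency dimensions reduce to simple arithmetic, sketched below. The 0.999 threshold and the 2-hour limit come from the text above. The function names and the idea of checking one batch at a time are assumptions for illustration.

```python
from datetime import datetime, timedelta

COMPLETENESS_THRESHOLD = 0.999        # "three nines", from the text
MAX_LATENCY = timedelta(hours=2)      # latency must stay below this, from the text


def completeness(rows_generated: int, rows_stored: int) -> float:
    """Fraction of generated rows that reached the data warehouse."""
    if rows_generated == 0:
        return 1.0  # nothing was generated, so nothing can be missing
    return rows_stored / rows_generated


def check_batch(rows_generated: int, rows_stored: int,
                generated_at: datetime, stored_at: datetime) -> list[str]:
    """Return the names of the dimensions that violate their limits."""
    alarms = []
    if completeness(rows_generated, rows_stored) < COMPLETENESS_THRESHOLD:
        alarms.append("completeness")
    if stored_at - generated_at >= MAX_LATENCY:
        alarms.append("latency")
    return alarms
```

With 1,000,000 rows generated, losing 500 rows gives a ratio of 0.9995 and passes, while losing 2,000 rows gives 0.998 and raises a completeness alarm. This sketch only detects lost rows. A real check would presumably also flag batches where more rows are stored than were generated.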

2. Effects and benefits

Ø Reduce the emergencies caused by business data quality issues;

Ø Increase the reliability and availability of business data;

Ø Reduce the communication time for operation and maintenance configuration changes, and improve the accuracy of automated implementation of configuration changes (TDW data entry);

Ø Provide better system service support for surrounding systems, such as data analysis and business acceptance;

3. Introduction to the access process

3.1 Overview of Data Monitoring Access

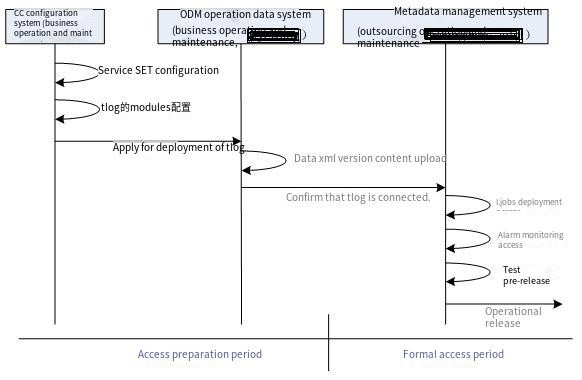

The external systems that data monitoring depends on are the CC Interactive Configuration System and the ODM Operation Data Management Platform System.

3.2 Data monitoring access process

Below is the normal sequence process for businesses to access the iMeta metadata management system:

Data Transmission Service

Data Transmission (DT/TDBank) is a fast and convenient tool for accessing the data warehouse TDW. It has features such as active data pulling, automatic sorting and storage, and real-time monitoring of access status, solving the problem of quickly writing massive data into the data warehouse. At the same time, it has the following characteristics:

Ø Simple configuration

Only three simple configurations are required to complete the data warehouse access;

Ø Automatic storage

According to the configuration, it will automatically pull data and sort it into storage, without manual intervention;

Ø Real-time monitoring

The status of configured tasks is monitored comprehensively and in real time, so the state of data access is always known promptly;

Ø Powerful features

It supports various types of data sources and provides rich management functions, including batch import and export operations that reduce repetitive work;

Ø Customized historical data supplementation

DT can support customized data supplementation for historical data at a single table level for any time period.

In terms of data collection support, data transmission has covered various IEG game business access methods, mainly including:

1) File reading (Tail method);

2) Message access (TCP/UDP method);

3) Real-time synchronization of MySQL binlog (under testing);

4) HTTP request message;

5) Syslog message.

Data Transmission (DT/TDBank) aims to unify the data access entry point, provide diverse data access methods and efficient real-time distributed data distribution, and achieve complete data lifecycle management and data services. In short, as long as you tell Data Transmission where the data is, what the data is, and how the data will be used, it automatically completes the whole process of data collection, sorting, and processing.
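The "where, what, and how" idea can be pictured as a three-part task description. The sketch below is not the DT/TDBank configuration format, which this article does not show. The class, its field names, and the sample values are hypothetical; only the five access methods are taken from the list above.

```python
from dataclasses import dataclass
from enum import Enum


class AccessMethod(Enum):
    """The access methods listed in the text."""
    FILE_TAIL = "tail"
    MESSAGE = "tcp_udp"
    MYSQL_BINLOG = "binlog"
    HTTP = "http"
    SYSLOG = "syslog"


@dataclass
class TransferTask:
    method: AccessMethod
    source: str          # where the data is (hypothetical field)
    fields: list[str]    # what the data is (hypothetical field)
    target_table: str    # how the data will be used (hypothetical field)

    def problems(self) -> list[str]:
        """List the parts of the description that are still missing."""
        issues = []
        if not self.source:
            issues.append("source is empty (where is the data?)")
        if not self.fields:
            issues.append("fields are empty (what is the data?)")
        if not self.target_table:
            issues.append("target_table is empty (how will the data be used?)")
        return issues


# Hypothetical example: tail a character creation log into a warehouse table.
task = TransferTask(
    method=AccessMethod.FILE_TAIL,
    source="/data/tlog/createrole.log",
    fields=["GameSvrId", "dtEventTime", "iZoneId", "vRoleName"],
    target_table="game_createrole",
)
```

Calling `task.problems()` on the example returns an empty list, while a task with all three parts left blank reports three problems.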

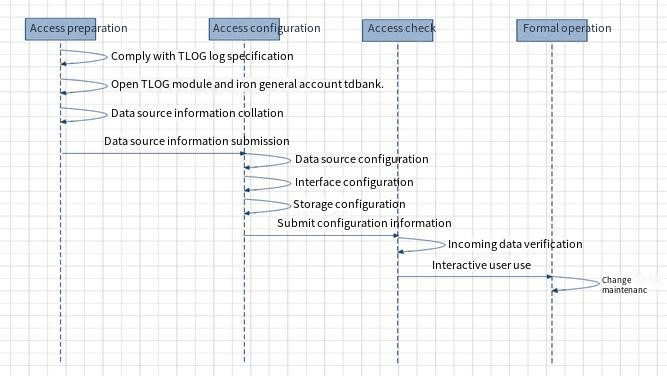

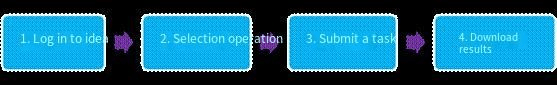

1. Data Access Process

2. Monitoring indicators

Operation and Maintenance Data Extraction Service

Operation and maintenance data extraction mainly involves efficiently extracting the data packages that business project teams request, from data sources such as Tlog and DR. As a supplement to business-side IDEA self-service extraction, personalized operation and maintenance extraction provides customized, real-time, game-status data extraction. At present, the IDEA self-service extraction platform serves 107 businesses, including: Dungeons and Warriors, Crossing the Line of Fire, Locke Kingdom, League of Legends, Dragon in the Sky, QQ Flying Car, Seven Heroes Competition, QQ Dance, Reverse War, QTalk, QQ Game, AVA, QQ Dance 2, H2, QQ Huaxia, NBA2k, Douzhanshen, QQ Pets, Q Pets' Big Le Dou, Seeking Immortals, QQ Three Kingdoms, QQ Sonic, Xuanyuan Legend, Farm, Woz, War, Tribe Guard Battle, etc. The data management team has completed daily data demand statistics for a total of 101 businesses connected to the operation management center.

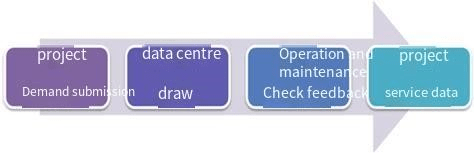

1. Data extraction process

1) Requirement system operation and maintenance extraction

1.1. Project colleagues submit data extraction requirements in the iflow requirements management system and judge whether early communication with the operation and maintenance team is needed;

1.2. Colleagues in the data center claim the requirement and confirm its details with the requester;

1.3. The operation and maintenance personnel assess whether the requirement can be met through IDEA self-service extraction and, if so, inform the business side so that it can perform the extraction itself;

1.4. When IDEA cannot meet the requirement, the operation and statistics personnel log in to the idab operation and maintenance data extraction platform to perform the data extraction and analysis;

1.5. The analysis results are sent to the requirement submitter by email or RTX, and the process ends.

2) IDEA self-service extraction task

The business side can log in to the IDEA self-service extraction platform and extract data directly. For complex requirements, follow the "Requirement system operation and maintenance extraction" process above.

3) Extranet activity data extraction process

3.1. Colleagues related to the project submit data statistics requirements in the process system;

3.2. Colleagues from the data center are assigned to extract the data and report back to the requester;

3.3. The requester verifies the formulas jointly with the operation and maintenance center and spot-checks the data;

3.4. After receiving confirmation from the operation and maintenance team, the requester uses the data and closely monitors external feedback.

Basic Operations and Maintenance Services

The basic operation and maintenance services cover the entire operation and maintenance system of the data center, involving resources, operational quality, efficiency, and cost, and including platform high availability, remote disaster recovery, monitoring, and unified resource application and management. The goal is to standardize the processes for bringing a business online, operating it, and taking it offline, and ultimately to achieve DO separation.

1. Transfer of operation and maintenance equipment for data analysis projects - More than 400 data analysis devices have been transferred to the data center for operation and maintenance, achieving DO separation and basic operation and maintenance coverage;

2. Improve platform high availability - Remove single points of failure from key platforms in the center and deploy multi-server load balancing at critical points;

3. Enhance data disaster recovery capabilities - Implement off-site backup for key data storage platforms in the data center (data analysis statistical data, DR files, etc.);

4. Monitoring and alarm construction - 100% service and process monitoring coverage for key platforms in the data center, and 100% basic monitoring coverage for data center equipment;

5. Construction of daily operation and maintenance specifications - Manage common operation and maintenance code on the VSS platform to improve code and script reuse, allocate manpower sensibly, and make full use of human resources;

6. Equipment virtualization construction - Migrate business equipment with low resource utilization and highly bursty load to a virtualized environment, raising resource utilization and reducing operating costs;

7. "Iron General" access - Through the "Iron General" project, the architecture platform gains centralized management of server accounts, real-name login, least-privilege permission allocation, strict control of super permissions, and full auditing of operations;

8. Development and testing environment - Separate the platform development, testing, and production environments, standardize the business release process, and use SVN version control to improve development and service quality.

Conclusion

As the basic service component of iData, data access provides stable, high-quality data sources for upper-level applications, ultimately laying the foundation for refined services for interactive entertainment game businesses. To help readers better understand each part and platform of the basic service components, subsequent articles in this series will introduce them one by one.
